There's a race to rein in AI. Who's winning?

Discover why BeReal's popularity plummeted, why social platforms are removing evidence of war crimes, and how Australia hopes to regulate AI.

Hello and welcome back!

Get ready for this instalment of Let’s Talk About Tech because we’ve got a whopper of a rundown:

A lawyer and an eating disorder helpline face consequences after using AI;

Leaders around the world scramble to regulate the technology, and the EU makes significant headway;

Evidence of war crimes is reportedly taken down on social media platforms, prompting questions about censorship, content moderation and human rights violations;

The popularity of BeReal has plummeted, and there’s something to be said about what we, as people, want from our online interactions.

Following on from last edition’s focus on growing AI concerns, we then do a deep dive into what’s being done around the world to regulate this technology 🇦🇺🇨🇳🇮🇹🇪🇺🇸🇬

We hope you enjoy this week’s read and that it, as always, helps spark some important conversations about our evolving world of tech.

THE RUNDOWN

Law and healthcare hit by harmful AI use

Instances of harmful AI usage are on the rise and making headlines everywhere.

Last week, a New York lawyer used ChatGPT to prepare a court filing, which got him into strife after the court discovered bogus legal cases cited throughout the submission.

Days later, it was reported that America’s National Eating Disorders Association (NEDA) had to take down its helpline’s AI chatbot, which was offering harmful dieting advice to people with eating disorders.

It’s not hard to see how the fallout of these applications of AI could be fatal, or at the very least detrimental to people’s livelihoods and freedoms. That’s why governments around the world are seeking safeguards, mostly in the form of regulation...

EU makes headway with AI regulation, Australia lags behind

Members of the European Parliament have agreed on a first draft of the AI Act, a regulatory framework built on a risk-based classification system. Many other countries have also taken steps to strengthen their AI governance in the face of growing social, economic and democratic threats.

Amid all the action, it’s become clear to some that in the proverbial race to AI regulation, Australia is lagging behind.

(Keep reading for a deep dive on the current outlook on AI regulation, both overseas and at home down under.)

Evidence of war crimes reportedly removed on social media

The BBC found that evidence of war crimes is being removed by social media platforms such as Facebook and Instagram. When the BBC attempted to upload footage documenting attacks on civilians in Ukraine, it says the footage was “swiftly deleted.”

While content moderators (whether human or AI) can remove harmful and illegal content at scale, taking down war footage raises concerns that evidence of human rights violations could be lost, along with questions about whether this kind of censorship is really in the public interest.

The topic of content moderation on social media garnered even more news coverage as Twitter’s VP of Trust & Safety (i.e. the company’s top content moderation official) resigned just days ago.

BeReal popularity plummets, but why?

In more social media news, photo-sharing platform BeReal has seen a steep decline in user activity, prompting questions about how much we really want authentic human interactions online.

The app saw huge success early on, jumping from 1 million to 20 million users in just seven months. But from October 2022 to March 2023, its daily active users more than halved, falling by 61% to a mere 6 million.

BeReal’s positioning centres on offering authentic glimpses into people’s everyday lives, and communication experts say the app’s decline could very well be down to the fact that people just don’t want the authenticity it sells. (We’ll leave it to you to interpret what that says about us…)

FOOD FOR THOUGHT

Now, the doom and gloom around AI’s future is infiltrating everything, from our IG feeds to workplace gossip and even our coffee convos. We covered that in our last instalment of #LTAT, so now we want to look at how Australia and the rest of the world are keeping up with ongoing calls for regulation.

First, picture AI as a tightrope walker (aka a funambulist) who is currently halfway across the rope, having raced very quickly to get there. If the funambulist keeps moving forward, we will arrive at innovations currently unimaginable. Behind the funambulist is regulation: laws that work to slow and restrain this incredible progress (for better or worse).

To some AI experts, like Geoffrey Hinton (dubbed the “Godfather of AI”) and Sam Altman (the CEO of OpenAI), this funambulist is walking a tightrope stretched across Niagara Falls, where, without regulation, the chance of (potentially existential) catastrophe is very high. In response, national leaders are taking different approaches to AI governance.

Last month, China 🇨🇳 proposed draft rules requiring generative AI products to undergo a security assessment before their release, citing risks to national security and social stability. The proposal also states that AI-generated content should “reflect the Socialist Core Values.”

The EU 🇪🇺 issued its first draft of the AI Act, which, like Australia’s proposed regulatory framework, classifies AI systems by risk and sets out requirements for their development and use. According to the World Economic Forum, the AI Act focuses primarily on strengthening rules around data quality, transparency, human oversight and accountability.

The problem with the EU’s framework, however, is that it isn’t expected to take effect for another 2.5 to 3 years. In the meantime, the EU is relying on an interim solution: a voluntary code of conduct, currently being developed through the Trade and Technology Council led by the EU and US.

On the other hand, Italy 🇮🇹 became the first Western country to temporarily ban ChatGPT back in March. But now, the Italian government hopes to “promote study, research and programming on AI” through a €150 million (AU$243 million) investment fund it plans to set up to support AI startups. Meanwhile, Singapore 🇸🇬 has adopted a voluntary, industry-driven approach to regulation.

Bringing the focus closer to home (that’s Australia 🇦🇺, for us writers), Minister for Industry and Science Ed Husic has acknowledged how crucial it is for our regulation to be “consistent with responses overseas”, not just to secure the potential $1-4 trillion addition to Australia’s current annual GDP, but also to extend and enhance innovation under appropriate safeguards for consumer protection.

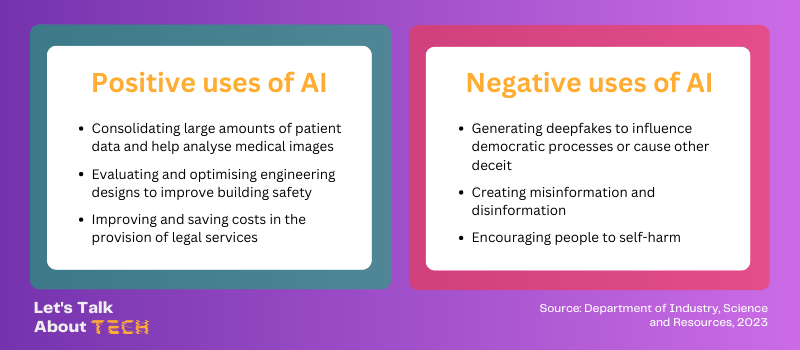

In an attempt to balance innovation and regulation, the Australian Government is focused on a number of positive and negative uses of AI:

So, if you were Minister Husic, tasked with balancing the pull between innovation and regulation in such unfamiliar territory, how would you walk the tightrope?

LOOKING FOR SOMETHING OTHER THAN YOUR #FYP TO KEEP YOU INFORMED?

(That’s ‘for you page’, for the boomers out there.)

If you thought the stuff we covered in this newsletter was interesting and you’re in the mood to dive a little deeper, here are some things we’ve run our ears and eyes over and curated just for you:

Thanks again for talking about tech with us. We hope this has helped you wrap your head around the evolving challenges and opportunities of AI, and given you some insight into the latest social trends.

Stay tuned for the latest news, reviews and more in next fortnight’s newsletter. And why not tell your friends about us? We’re sure they’d love to be in the loop.